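Hi, after deploying to the phone, the WebSocket can't connect. The phone and the server are on the same LAN, and I've confirmed the devices can reach each other. DevEco Studio can only Run, not Debug: after Debug, the app just shows an icon and never starts properly. Also, why is it capped at 30 fps?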

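Got it: Colyseus doesn't support HarmonyOS. Does that mean I can't build a multiplayer online game on this framework? Looks like I'll have to switch projects or drop out of the competition.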


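This device is a development board with entry-level hardware, so game performance is fairly poor; locking the frame rate makes it run more smoothly.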

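Hi, the online multiplayer game I'm entering in the competition uses Colyseus, and this framework doesn't support HarmonyOS. Does that mean there's no way around it?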

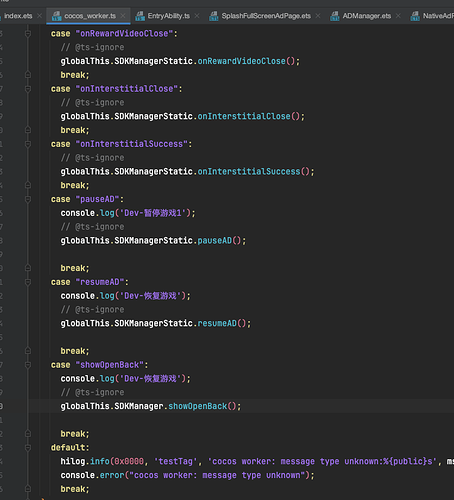

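I'd like to ask how to do interop between Cocos and HarmonyOS. Sending to HarmonyOS with globalThis.oh.postMessage("xxx", "xxx") already works. How do I get HarmonyOS to send a message back to Cocos? Can I handle that in the cocos_worker script?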

Thank you very much for your answer!

This one is built with Ark; I don't know about V8 either 0.0

Are you around? Could you tell me how, in an OpenHarmony project built with V8, the HarmonyOS side should call into the Creator side?

The OpenHarmony project built with Ark crashes on a HarmonyOS phone running 5.0.0.110, reporting LastFatalMessage: Assertion failed: isObject() (/Applications/Cocos/Creator/2.4.13/CocosCreator.app/Contents/Resources/cocos2d-x/cocos/scripting/js-bindings/jswrapper/Value.cpp: toObject: 583)

It also goes through the worker, in a similar way:

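```typescript
// Worker side (runs where globalThis.uiPort lives, i.e. the Cocos worker):
// relays speech-recognition messages between HarmonyOS and the Cocos scripts.
// PortProxy must be imported; the HarmonyOS-side file uses '../common/PortProxy',
// adjust the path to this file's location.
export class ccAsr {
  private uiPort: PortProxy;

  constructor() {
    this.uiPort = globalThis.uiPort;

    // HarmonyOS -> Cocos: intermediate recognition result
    this.uiPort.on('onASR', (text: string) => {
      console.log("cocos info onASR" + text);
      globalThis.startMic(text);
    });

    // HarmonyOS -> Cocos: final recognition result
    this.uiPort.on('endASR', (text: string) => {
      globalThis.endMic(text);
      console.log("cocos info endASR" + text);
    });
  }

  // Cocos -> HarmonyOS: ask the HarmonyOS side to start recognition
  startSpeech() {
    if (this.uiPort) {
      this.uiPort.postMessage('startSpeech', '');
    }
  }

  // Cocos -> HarmonyOS: ask the HarmonyOS side to stop recognition
  endSpeech() {
    if (this.uiPort) {
      this.uiPort.postMessage('endSpeech', '');
    }
  }
}

export function initAsr() {
  globalThis.asr = new ccAsr();
}
```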

```typescript
// HarmonyOS side: wraps Core Speech Kit speech recognition and talks to the
// Cocos worker through PortProxy.
import { speechRecognizer } from '@kit.CoreSpeechKit';
import { BusinessError } from '@kit.BasicServicesKit';
import abilityAccessCtrl, { Permissions } from '@ohos.abilityAccessCtrl';
import { bundleManager, common } from '@kit.AbilityKit';
import { PortProxy } from '../common/PortProxy';

const permissions: Array<Permissions> = ['ohos.permission.MICROPHONE'];

async function checkAccessToken(permission: Permissions): Promise<abilityAccessCtrl.GrantStatus> {
  let atManager: abilityAccessCtrl.AtManager = abilityAccessCtrl.createAtManager();
  let grantStatus: abilityAccessCtrl.GrantStatus = abilityAccessCtrl.GrantStatus.PERMISSION_DENIED;

  // Get the application's accessTokenID
  let tokenId: number = 0;
  try {
    let bundleInfo: bundleManager.BundleInfo = await bundleManager.getBundleInfoForSelf(bundleManager.BundleFlag.GET_BUNDLE_INFO_WITH_APPLICATION);
    let appInfo: bundleManager.ApplicationInfo = bundleInfo.appInfo;
    tokenId = appInfo.accessTokenId;
  } catch (error) {
    const err: BusinessError = error as BusinessError;
    console.error(`Failed to get bundle info for self. Code is ${err.code}, message is ${err.message}`);
  }

  // Check whether the app has been granted the permission
  try {
    grantStatus = await atManager.checkAccessToken(tokenId, permission);
  } catch (error) {
    const err: BusinessError = error as BusinessError;
    console.error(`Failed to check access token. Code is ${err.code}, message is ${err.message}`);
  }
  return grantStatus;
}

export class asrCtrl {
  private asrEngine: speechRecognizer.SpeechRecognitionEngine = null!;
  extraParams: Record<string, Object> = { "locate": "CN", "recognizerMode": "short" };
  private _id = 0;
  private _workPort: PortProxy;
  private _context: common.UIAbilityContext;

  constructor(port: PortProxy) {
    this._workPort = port;
    console.log("cocos info initted asr");
    // Requests sent from the Cocos side (ccAsr.startSpeech / ccAsr.endSpeech)
    this._workPort.on('startSpeech', () => {
      this.startSpeech();
    });
    this._workPort.on('endSpeech', () => {
      this.onEnd();
    });
    this.init();
  }

  init() {
    if (this.asrEngine) return;
    // Engine creation parameters
    let initParamsInfo: speechRecognizer.CreateEngineParams = {
      language: 'zh-CN',
      online: 1,
      extraParams: this.extraParams
    };
    let port = this._workPort;
    speechRecognizer.createEngine(initParamsInfo, (err: BusinessError, speechRecognitionEngine: speechRecognizer.SpeechRecognitionEngine) => {
      if (!err) {
        // Keep the created engine instance
        this.asrEngine = speechRecognitionEngine;
        let setListener: speechRecognizer.RecognitionListener = {
          // Recognition started successfully
          onStart(sessionId: string, eventMessage: string) {
            // console.info("asr onStart sessionId: " + sessionId + "eventMessage: " + eventMessage);
          },
          // Event callback
          onEvent(sessionId: string, eventCode: number, eventMessage: string) {
            // console.info("asr onEvent sessionId: " + sessionId + "eventCode: " + eventCode + "eventMessage: " + eventMessage);
          },
          // Recognition results, both intermediate and final; pushed to the Cocos worker
          onResult(sessionId: string, result: speechRecognizer.SpeechRecognitionResult) {
            console.info("cocos info rec asr: " + result.result);
            if (result.isFinal) {
              port.emit('endASR', result.result);
            } else {
              port.emit('onASR', result.result);
            }
          },
          // Recognition completed
          onComplete(sessionId: string, eventMessage: string) {
            console.info("asr onComplete sessionId: " + sessionId + "eventMessage: " + eventMessage);
          },
          // Error callback; error codes are reported here.
          // 1002200002: failed to start recognition, raised when startListening is called again while running.
          // For other codes, see the error code reference.
          onError(sessionId: string, errorCode: number, errorMessage: string) {
            // console.error("asr onError sessionId: " + sessionId + "errorCode: " + errorCode + "errorMessage: " + errorMessage);
          },
        };
        // Register the callbacks
        this.asrEngine.setListener(setListener);
      } else {
        // 1002200001: engine creation failed (unsupported language or mode, initialization timeout, missing resources, etc.)
        // 1002200006: engine is busy, usually because several apps are using the speech recognition engine at once
        // 1002200008: engine is being destroyed
        console.error("errCode: " + err.code + " errMessage: " + err.message);
      }
    });
  }

  setContext(context: common.UIAbilityContext) {
    this._context = context;
  }

  reqPermissionsFromUser(permissions: Array<Permissions>, context: common.UIAbilityContext): void {
    let atManager: abilityAccessCtrl.AtManager = abilityAccessCtrl.createAtManager();
    // requestPermissionsFromUser checks the current grant status to decide whether to show the dialog
    atManager.requestPermissionsFromUser(context, permissions).then((data) => {
      let grantStatus: Array<number> = data.authResults;
      let length: number = grantStatus.length;
      for (let i = 0; i < length; i++) {
        if (grantStatus[i] === 0) {
          // Granted: the target operation can proceed
        } else {
          // Denied: tell the user the permission is required for this feature and guide them to enable it in system settings
          return;
        }
      }
      // All permissions granted
    }).catch((err: BusinessError) => {
      console.error(`Failed to request permissions from user. Code is ${err.code}, message is ${err.message}`);
    });
  }

  private _started = false;

  async startSpeech() {
    console.log("cocos info start asr");
    let grantStatus: abilityAccessCtrl.GrantStatus = await checkAccessToken(permissions[0]);
    if (grantStatus !== abilityAccessCtrl.GrantStatus.PERMISSION_GRANTED) {
      console.info("speech" + grantStatus);
      this.reqPermissionsFromUser(permissions, this._context);
      return;
    }
    this.onStart();
  }

  onStart() {
    // The engine is created asynchronously in init(); do nothing until it exists
    if (!this.asrEngine) return;
    this._started = true;
    this._id++;
    // Parameters for starting recognition
    let recognizerParams: speechRecognizer.StartParams = {
      sessionId: this._id + "",
      audioInfo: { audioType: 'pcm', sampleRate: 16000, soundChannel: 1, sampleBit: 16 },
      extraParams: { "recognitionMode": 0 }
    };
    // Start recognition
    this.asrEngine.startListening(recognizerParams);
  }

  onEnd() {
    if (!this._started) return;
    this.asrEngine.finish(this._id + "");
    this._started = false;
  }
}

//let asr = new asrCtrl();
//export default asr as asrCtrl;
```

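So is it like this: I call globalThis.oh.postSyncMessage(msgType, msgData) from the Cocos side; the Cocos worker's uiPort then forwards it to the HarmonyOS worker's workPort via const result = await uiPort.postSyncMessage(msgType, msgData); and finally, once the HarmonyOS workPort has the data it needs, it returns it to the Cocos worker's uiPort via this.workPort.postReturnMessage(data, result). Is that one complete round trip between Cocos and HarmonyOS?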
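The round trip described above can be pictured as a request/response pair matched by an id; the await in the question suggests the "sync" call is really a Promise that resolves when the reply arrives. The sketch below is a hypothetical in-memory stand-in, not the real PortProxy: SyncBridgeEnd, connect, handle and the message shapes are invented for illustration. Its postSyncMessage plays the role of uiPort.postSyncMessage, and the reply sent from receive plays the role of workPort.postReturnMessage.

```typescript
// Hypothetical stand-in for the two ends of the Cocos worker <-> HarmonyOS channel.
// Not the real PortProxy API; it only illustrates request/reply matching by id.
type BridgeMessage =
  | { kind: 'request'; id: number; msgType: string; msgData: string }
  | { kind: 'return'; id: number; result: string };

class SyncBridgeEnd {
  private peer: SyncBridgeEnd | null = null;
  private nextId = 0;
  // Requests sent from this end that are still waiting for a reply.
  private pending = new Map<number, (result: string) => void>();
  // Handlers that answer requests arriving from the other end.
  private handlers = new Map<string, (msgData: string) => string>();

  connect(peer: SyncBridgeEnd): void {
    this.peer = peer;
    peer.peer = this;
  }

  handle(msgType: string, handler: (msgData: string) => string): void {
    this.handlers.set(msgType, handler);
  }

  // Role of uiPort.postSyncMessage: send a request, resolve when its reply arrives.
  postSyncMessage(msgType: string, msgData: string): Promise<string> {
    const id = ++this.nextId;
    return new Promise<string>((resolve) => {
      this.pending.set(id, resolve);
      this.send({ kind: 'request', id, msgType, msgData });
    });
  }

  private send(msg: BridgeMessage): void {
    const peer = this.peer;
    if (!peer) throw new Error('bridge end is not connected');
    // Deliver asynchronously, as a real cross-thread port would.
    Promise.resolve().then(() => peer.receive(msg));
  }

  private receive(msg: BridgeMessage): void {
    if (msg.kind === 'request') {
      const handler = this.handlers.get(msg.msgType);
      const result = handler ? handler(msg.msgData) : '';
      // Role of workPort.postReturnMessage: reply with the same id.
      this.send({ kind: 'return', id: msg.id, result });
    } else {
      const resolve = this.pending.get(msg.id);
      this.pending.delete(msg.id);
      resolve?.(msg.result);
    }
  }
}

// Usage: 'getDeviceName' is a made-up message type.
const cocosSide = new SyncBridgeEnd();   // plays the Cocos worker's uiPort
const harmonySide = new SyncBridgeEnd(); // plays the HarmonyOS workPort
cocosSide.connect(harmonySide);
harmonySide.handle('getDeviceName', () => 'demo-device');
cocosSide.postSyncMessage('getDeviceName', '').then((result) => {
  console.log(result); // demo-device
});
```

The id is what lets several requests be in flight at once without their replies getting mixed up.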

Sorry, I didn't follow. If the Manager script in my TS has a method called test(), how should I write the HarmonyOS side? 0.0

Is there an equivalent of the Google (Android) approach, Cocos2dxJavascriptJavaBridge.evalString("cc['Manager'].test()");?

globalThis.startMic(text); looks like the right thing, but how is startMic written on the TS side?
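One way to answer both questions, assuming the worker-side ccAsr code above is used as-is: the Cocos script publishes plain functions on globalThis, and the worker calls them by name when a message arrives from HarmonyOS. In this sketch Manager is a plain class so it runs anywhere (in a real project it would be a Cocos component calling register() from onLoad and unregister() from onDestroy), and managerTest is a hypothetical hook name; the worker would call globalThis.managerTest() from a port handler the same way ccAsr calls globalThis.startMic(text).

```typescript
// Hypothetical Cocos-side script: publishes callbacks on globalThis so the
// worker can call them by name. startMic/endMic match the ccAsr code;
// managerTest is a made-up hook for reaching Manager.test().
interface CocosHooks {
  startMic?: (text: string) => void;
  endMic?: (text: string) => void;
  managerTest?: () => string;
}

const hooks = globalThis as unknown as CocosHooks;

class Manager {
  partialText = '';
  finalText = '';

  // The method the HarmonyOS side wants to trigger.
  test(): string {
    return 'Manager.test called';
  }

  // Publish the callbacks (e.g. from a component's onLoad).
  register(): void {
    hooks.startMic = (text: string) => {
      this.partialText = text; // intermediate recognition result
    };
    hooks.endMic = (text: string) => {
      this.finalText = text; // final recognition result
    };
    hooks.managerTest = () => this.test();
  }

  // Remove them again (e.g. from onDestroy) so the worker cannot reach a destroyed object.
  unregister(): void {
    hooks.startMic = undefined;
    hooks.endMic = undefined;
    hooks.managerTest = undefined;
  }
}

// Usage: simulate what the worker does when messages arrive.
const manager = new Manager();
manager.register();
hooks.startMic?.('hello');
hooks.endMic?.('hello world');
console.log(manager.partialText, '|', manager.finalText); // hello | hello world
console.log(hooks.managerTest?.());                       // Manager.test called
```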

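Hello, thanks for your earlier answer. While reading the project code I found traces of Cocos-HarmonyOS interop. In DevEco Studio, search the whole project for window.oh.postSyncMessage: jsb-engine.js has an interop implementation for the video "currentTime" property, which is synchronous. I'm still looking into the asynchronous one, window.oh.postMessage.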

Thanks, but I still don't get it.

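It still goes through the worker. When you call globalThis.oh.postSyncMessage(messageType, messageData) in a Cocos TS script, it ends up in nativeContext.postSyncMessage in cocos_worker.ts in the HarmonyOS project. That function hands the request to the Cocos worker's uiPort, which forwards it to the HarmonyOS worker.

You can find that HarmonyOS worker in entry/src/main/ets/pages/index.ets: its aboutToAppear function handles the message, and that is where the result is returned.

That's how it behaves when I step through it with breakpoints.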

I know that part. Creator sends messages to HarmonyOS with globalThis.oh.postMessage("HiAdLog", _str); what I don't know is the HarmonyOS-to-Creator direction.

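For asynchronous interop I haven't found anything either: in the official code, every postMessage is handled entirely on the HarmonyOS side, and nothing is returned to Cocos.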
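For the HarmonyOS-to-Cocos direction, the speech-recognition code earlier in the thread already shows one pattern: the HarmonyOS side calls port.emit(...), the worker's uiPort.on(...) handler receives it, and that handler calls a function the Cocos script put on globalThis. The sketch below models this fire-and-forget push with a hypothetical EventPort class; its on/emit semantics are assumed from how that code uses them, not taken from PortProxy's source, and 'nativeEvent' and onNativeEvent are made-up names.

```typescript
// Hypothetical stand-in for the on/emit style used by the ASR code.
// Two linked endpoints; each keeps a table of handlers per event name.
type Handler = (data: string) => void;

class EventPort {
  private peer: EventPort | null = null;
  private handlers = new Map<string, Handler[]>();

  link(peer: EventPort): void {
    this.peer = peer;
    peer.peer = this;
  }

  // Register a handler for events coming from the other end.
  on(name: string, handler: Handler): void {
    const list = this.handlers.get(name) ?? [];
    list.push(handler);
    this.handlers.set(name, list);
  }

  // Fire-and-forget: delivered asynchronously, nothing is returned to the sender.
  emit(name: string, data: string): void {
    const peer = this.peer;
    if (!peer) return;
    Promise.resolve().then(() => peer.dispatch(name, data));
  }

  private dispatch(name: string, data: string): void {
    for (const h of this.handlers.get(name) ?? []) h(data);
  }
}

// Usage
const uiSide = new EventPort();     // HarmonyOS end (index.ets)
const workerSide = new EventPort(); // Cocos worker end
uiSide.link(workerSide);

// Worker: forward the event to whatever the Cocos script published on globalThis.
const g = globalThis as unknown as { onNativeEvent?: (data: string) => void };
workerSide.on('nativeEvent', (data) => g.onNativeEvent?.(data));

// Cocos script: publish the callback.
g.onNativeEvent = (data) => console.log('cocos got: ' + data);

// HarmonyOS side: push at any time, e.g. from an SDK callback.
uiSide.emit('nativeEvent', 'ad closed'); // logs "cocos got: ad closed"
```

Because the push has no reply, it suits notifications such as recognition results or ad callbacks; anything that needs an answer back fits the request/reply pattern better.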